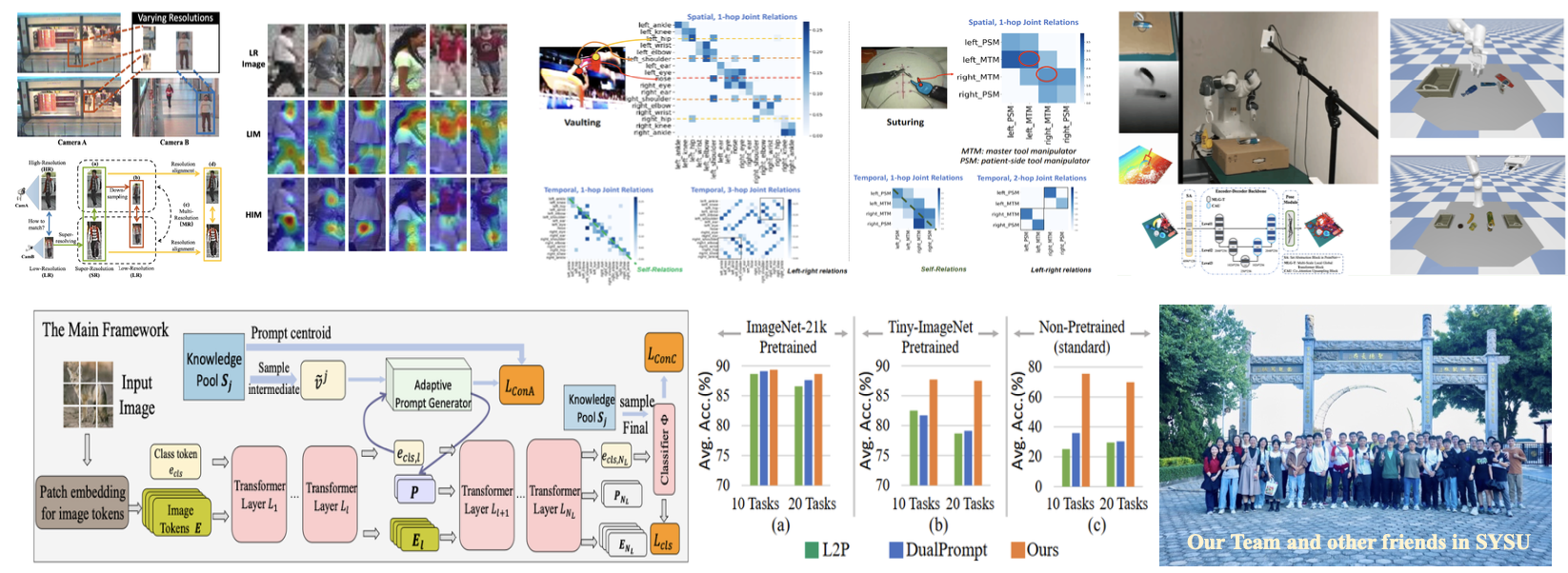

Our group's research focuses on human identification and activity recognition. We are also interested in developing large-scale machine learning methods for processing large-scale data.

[Person Re-identification] [Action & Activity Recognition] [Face Recognition] [Large-scale Machine Learning]

Person re-identification (re-id) is the task of matching images of the same person captured at different places and times across non-overlapping camera views in a visual surveillance system.

Relative Distance Comparison: In our early work, we proposed a relative distance comparison model, a soft discriminant model designed to alleviate the over-fitting caused by large intra-class appearance variations across non-overlapping camera views.
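The idea behind relative distance comparison can be sketched as follows. Instead of requiring matched pairs to fall under a hard distance threshold, each probe only needs to be closer to a true match than to a wrong match, and violations are penalized softly with a logistic loss. This is a minimal sketch under simplifying assumptions: the metric here is a non-negative per-feature weighting `w` (a diagonal metric) trained by projected gradient descent, whereas the published model learns a richer metric. The function names and the toy data are hypothetical.

```python
import numpy as np

def rdc_loss_and_grad(w, triplets):
    """Soft relative comparison loss over (probe, match, non-match) triplets.

    The distance is a weighted squared Euclidean distance
    d_w(a, b) = sum_k w_k * (a_k - b_k)**2. Each triplet contributes
    log(1 + exp(d_w(p, pos) - d_w(p, neg))), which is small when the true
    match is closer than the wrong match and grows smoothly otherwise.
    """
    loss = 0.0
    grad = np.zeros_like(w)
    for p, pos, neg in triplets:
        delta = (p - pos) ** 2 - (p - neg) ** 2   # per-feature distance difference
        z = w @ delta                             # d_w(p, pos) - d_w(p, neg)
        loss += np.logaddexp(0.0, z)              # stable log(1 + exp(z))
        grad += delta / (1.0 + np.exp(-z))        # sigmoid(z) * dz/dw
    return loss / len(triplets), grad / len(triplets)

def train_rdc(triplets, dim, lr=0.1, steps=200):
    """Projected gradient descent on a non-negative diagonal metric w."""
    w = np.ones(dim)                              # start from plain Euclidean distance
    for _ in range(steps):
        _, g = rdc_loss_and_grad(w, triplets)
        w = np.maximum(w - lr * g, 0.0)           # keep every weight non-negative
    return w
```

With one identity-informative feature and one feature that is pure cross-camera noise, training shifts weight toward the informative feature. Because the loss is soft, a few ambiguous triplets may remain violated instead of forcing the metric to over-fit them.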

Open-world Re-ID: Building on the relative comparison model, we further generalized it to open-world person re-identification. In this setting, we assume that only a short list of people (i.e. a watch list) is of concern for tracking in a camera network, while everyone else is an imposter to the re-id system. We model this with transfer local relative distance comparison, which uses a source dataset to assist re-id on the limited target data available for the people on the watch list.

Beyond the open-world problem, we also consider the general transfer problem for re-id, in order to reduce the labeling cost of deploying the system in a new scenario.

Modeling view-specific transforms: We find that the distribution of images of the same group of people differs from view to view. Modeling view-specific transforms is therefore useful, both to fully exploit the feature characteristics of each view and to learn a common feature space that aligns the data distributions of different views. We have two recent works on this topic. In TCSVT, we presented asymmetric distance modeling across different views. In IJCAI 2015, we further proposed a more effective and easier-to-apply view-specific domain adaptation method.
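The benefit of view-specific modeling can be illustrated with the simplest possible view-specific transform: standardizing each camera view with its own statistics before comparing across views. This toy sketch is not the asymmetric distance model or the domain adaptation method cited above; per-view standardization is an illustrative stand-in, and all names are hypothetical. It only shows that when a view applies its own shift and scale to the features, a single shared metric fails, while per-view transforms into a common space recover the matches.

```python
import numpy as np

def fit_view_transform(X):
    """Per-view statistics (mean, std) estimated from one camera's samples (rows)."""
    return X.mean(axis=0), X.std(axis=0) + 1e-8   # epsilon avoids division by zero

def apply_view_transform(X, stats):
    """Map one view's samples into the shared space by standardizing them."""
    mean, std = stats
    return (X - mean) / std

def rank1_accuracy(probe, gallery):
    """Fraction of probes whose nearest gallery sample (Euclidean) is the same identity.

    Row i of probe and row i of gallery are assumed to show the same person.
    """
    d = ((probe[:, None, :] - gallery[None, :, :]) ** 2).sum(axis=2)
    return float((d.argmin(axis=1) == np.arange(len(probe))).mean())
```

In practice each view's statistics would be estimated from unlabeled images of that camera, which is what makes a view-specific transform cheap to obtain compared with labeled cross-view pairs.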

Partial Re-ID, Occlusion & Low Resolution: Recently, we have also considered more challenging re-id problems, including partial re-id and low resolution. In particular, partial re-id addresses the partial observation of a person in real-world crowded scenes.

Deep Re-ID: In WACV, we proposed a deep fusion neural network that makes the deep network learn features complementary to hand-crafted features.

Context: We have also developed transfer context learning for object and human detection. Context is critical for minimising ambiguity in object detection. In this work, a novel context modelling framework is proposed that requires no prior scene segmentation or context annotation. This is achieved through a new polar geometric histogram descriptor for context representation. To quantify context, we formulate a new context risk function and a maximum margin context (MMC) model that solves the minimization problem of the risk function. Crucially, the usefulness of contextual information is evaluated directly and explicitly through a discriminant context inference method and a context confidence function, so that only reliable contextual information relevant to object detection is utilised.
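A polar geometric histogram can be sketched as binning the positions of surrounding context elements (for example, nearby detections) by distance and angle relative to a candidate object. The ring radii, the number of angular sectors, and what counts as a context point are assumptions made for illustration; the descriptor in the paper may bin and weight its inputs differently, and `polar_histogram` is a hypothetical name.

```python
import math

def polar_histogram(center, points, r_edges=(0.5, 1.0, 2.0, 4.0), n_angle=4):
    """Count context points in (ring, sector) bins around a candidate center.

    Coordinates are assumed to be normalized by the candidate's size already.
    r_edges gives the outer radius of each ring; points beyond the last edge
    are ignored. Angles are split into n_angle equal sectors, measured
    counter-clockwise from the positive x-axis.
    Returns a flat list of length len(r_edges) * n_angle (ring-major order).
    """
    hist = [0] * (len(r_edges) * n_angle)
    cx, cy = center
    for x, y in points:
        dx, dy = x - cx, y - cy
        r = math.hypot(dx, dy)
        ring = next((i for i, edge in enumerate(r_edges) if r <= edge), None)
        if ring is None:
            continue                                   # outside the context window
        theta = math.atan2(dy, dx) % (2 * math.pi)     # map angle to [0, 2*pi)
        sector = min(int(theta / (2 * math.pi / n_angle)), n_angle - 1)
        hist[ring * n_angle + sector] += 1
    return hist
```

Because every bin is defined relative to the candidate's center, the same arrangement of context yields the same histogram wherever the candidate appears in the image, so no scene segmentation is needed to compute it.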

Interestingly, we have also explored group context for assisting person re-identification. In a crowded public space, people often walk in groups, either with people they know or with strangers. Associating a group of people over space and time can assist in understanding individuals' behaviours, as it provides vital visual context for matching individuals within the group. Although it may seem an "easier" task than person matching, this problem is in fact very challenging, because a group of people can be highly non-rigid, with the relative positions of its members changing and with severe self-occlusions. We address, for the first time, the problem of matching/associating groups of people over large space and time captured in multiple non-overlapping camera views. Specifically, we propose a novel people group representation and a group matching algorithm. The former addresses changes in the relative positions of people in a group, and the latter deals with variations in illumination and viewpoint across camera views. We also demonstrate a notable improvement in individual person matching by utilising the group description as visual context.

In action and activity recognition, we are interested in interaction recognition, either between humans and objects or between humans.

Human-Object Interaction (HOI): Our first work presented an exemplar-based HOI model that makes the recognition system tolerant to inaccurate object detection.

Later, to reduce the impact of lighting and to exploit heterogeneous features for more robust recognition, we developed an RGB-D-based HOI method that jointly learns from heterogeneous features.

Collective Activity Recognition: To learn the interactions between people in a group, we presented a graph-based interaction learning model.

Discriminant subspace learning: Principal component selection in PCA+LDA has been debated. This work shows that small principal components (those corresponding to small eigenvalues) are useful and should be carefully selected in PCA+LDA. A foundation for principal component selection in LDA is established, and a new genetic algorithm (GA) technique is used for implementation.
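The claim that small principal components can carry discriminant information is easy to check with a small sketch: compute the principal components, project onto a chosen subset, and score that subset with a Fisher-type criterion. Here an exhaustive search over subsets stands in for the genetic algorithm mentioned above, and the trace-ratio score is an assumed simplification of the LDA criterion; the function names are hypothetical.

```python
import itertools
import numpy as np

def pca_components(X):
    """Eigenvalues and principal directions (columns), sorted by decreasing eigenvalue."""
    evals, evecs = np.linalg.eigh(np.cov(X, rowvar=False))
    order = np.argsort(evals)[::-1]
    return evals[order], evecs[:, order]

def fisher_score(Z, y):
    """Trace ratio tr(S_b) / tr(S_w) of projected data Z with class labels y."""
    mu = Z.mean(axis=0)
    sb = sw = 0.0
    for c in np.unique(y):
        Zc = Z[y == c]
        sb += len(Zc) * np.sum((Zc.mean(axis=0) - mu) ** 2)   # between-class spread
        sw += np.sum((Zc - Zc.mean(axis=0)) ** 2)             # within-class spread
    return sb / sw

def best_subset(X, y, k):
    """Exhaustively pick the k principal components with the highest Fisher score."""
    _, V = pca_components(X)
    Xc = X - X.mean(axis=0)
    best = max(itertools.combinations(range(V.shape[1]), k),
               key=lambda idx: fisher_score(Xc @ V[:, list(idx)], y))
    return list(best)
```

When the class-separating direction has the smallest variance, a rule that keeps only the leading components discards it, while score-based selection keeps it. Exhaustive search is only feasible for a handful of components, which is why a search heuristic such as a GA is needed in practice.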

From 2003 to 2008, many works showed that algorithms based on (2D) matrix representations perform better than traditional (1D) vector-based ones. In particular, 2D-LDA was widely reported to outperform 1D-LDA. But is matrix-based linear discriminant analysis always superior, and when is 1D-LDA better? This work gives a theoretical analysis and an experimental comparison of 1D-LDA and 2D-LDA. Contrary to existing views, we find no convincing evidence that 2D-LDA always outperforms 1D-LDA when the number of training samples per class is small or when the number of discriminant features used is small.
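The structural difference between the two methods can be made concrete by computing their scatter matrices. 1D-LDA flattens each m x n image into an mn-vector, while (one-sided) 2D-LDA works directly on the image matrices and only forms n x n scatter matrices. The sketch below is illustrative only: the right-sided 2D-LDA variant, the small ridge term in `lda_directions`, and all function names are assumptions, and it does not reproduce the paper's analysis.

```python
import numpy as np

def scatter_1d(images, y):
    """1D-LDA scatter matrices: each m x n image is flattened to an mn-vector."""
    X = images.reshape(len(images), -1)
    mu = X.mean(axis=0)
    d = X.shape[1]
    Sb, Sw = np.zeros((d, d)), np.zeros((d, d))
    for c in np.unique(y):
        Xc = X[y == c]
        diff = (Xc.mean(axis=0) - mu)[:, None]
        Sb += len(Xc) * (diff @ diff.T)
        R = Xc - Xc.mean(axis=0)
        Sw += R.T @ R
    return Sb, Sw

def scatter_2d(images, y):
    """Right-sided 2D-LDA scatter matrices, built from n x n products of image matrices."""
    M = images.mean(axis=0)
    n = images.shape[2]
    Sb, Sw = np.zeros((n, n)), np.zeros((n, n))
    for c in np.unique(y):
        Ac = images[y == c]
        Mc = Ac.mean(axis=0)
        Sb += len(Ac) * ((Mc - M).T @ (Mc - M))
        for A in Ac:
            Sw += (A - Mc).T @ (A - Mc)
    return Sb, Sw

def lda_directions(Sb, Sw, k, reg=1e-6):
    """Top-k eigenvectors of Sw^{-1} Sb; the small ridge keeps Sw invertible."""
    Sw = Sw + reg * np.eye(len(Sw))
    evals, evecs = np.linalg.eig(np.linalg.solve(Sw, Sb))
    order = np.argsort(evals.real)[::-1]
    return evecs[:, order[:k]].real
```

With 12 images of size 8 x 6 from 3 classes, the 48 x 48 within-class scatter of 1D-LDA has rank at most 12 - 3 = 9 and is singular, while the 6 x 6 scatter of 2D-LDA is typically full rank. Both within-class matrices nevertheless have the same trace, since they sum the same squared residuals. The smaller matrices are easier to estimate, at the cost of ignoring covariances between different image rows.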

In deriving Fisher's LDA formulation, it is assumed that the empirical class mean equals its expectation. This may not hold in practice, yet the problem has rarely been discussed. From a perturbation perspective, we develop a new algorithm, called perturbation LDA (P-LDA), in which perturbation random vectors are introduced to learn the effect of the difference between the empirical class mean and its expectation in the Fisher criterion.

Sparse Feature Learning: NMF, a matrix factorization that imposes non-negativity on both factors, is popular for extracting sparse features. But why should non-negativity be imposed on both the components and the coefficients, and what happens if one constraint is relaxed? In this work, we find that releasing the non-negativity constraint on the coefficients in NMF helps extract components/features that are equally or much sparser and more reconstructive than those of two-sided non-negative matrix factorization techniques. To the best of our knowledge, the exact 17 local components of the Swimmer data set are successfully extracted for the first time.
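Relaxing the constraint on one side can be written as `X ≈ W H` with the components `W` kept non-negative and the coefficients `H` allowed to take any sign. The sketch below alternates an exact least-squares solve for `H` with a projected gradient step for `W`; this solver is an illustrative assumption rather than the algorithm from the paper, it includes no explicit sparsity term, and `one_sided_nmf` is a hypothetical name.

```python
import numpy as np

def one_sided_nmf(X, k, iters=300, seed=0):
    """Factorize X (d x n) as W @ H with W >= 0 (components) and H of any sign.

    Alternates an exact least-squares solve for H with one projected gradient
    step on W of size 1/L, where L is the Lipschitz constant of the W-gradient.
    """
    rng = np.random.default_rng(seed)
    W = rng.random((X.shape[0], k))
    for _ in range(iters):
        H = np.linalg.lstsq(W, X, rcond=None)[0]      # unconstrained coefficients
        L = np.linalg.norm(H @ H.T, 2) + 1e-12        # largest eigenvalue of H H^T
        grad = (W @ H - X) @ H.T                      # gradient of 0.5 * ||W H - X||_F^2
        W = np.maximum(W - grad / L, 0.0)             # project onto W >= 0
    H = np.linalg.lstsq(W, X, rcond=None)[0]
    return W, H
```

Because each H-step is an exact minimization and each W-step is a projected gradient step of size 1/L, the reconstruction error never increases from one iteration to the next.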

We present a sparse correntropy framework for computing robust sparse representations of face images for recognition. Compared with the state-of-the-art l1-norm-based sparse representation classifier (SRC), which assumes that noise also has a sparse representation, our sparse algorithm is developed based on the maximum correntropy criterion, which is much less sensitive to outliers. Within the proposed correntropy framework, several new methods have been developed for face recognition and object recognition.
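The robustness of the maximum correntropy criterion comes from its Gaussian kernel: a residual much larger than the kernel width, such as an occluded pixel, contributes almost nothing to the objective. A standard way to optimize it is half-quadratic iteration, which amounts to iteratively reweighted least squares with weights `exp(-e**2 / (2 * sigma**2))`. The sketch below applies this to coding a test vector over a dictionary of training samples; it omits the sparsity term, and the fixed `sigma`, the iteration count, and the function name are assumptions.

```python
import numpy as np

def correntropy_coding(D, y, sigma=1.0, iters=20):
    """Robustly fit y ~ D @ a by maximizing sum_i exp(-e_i**2 / (2 * sigma**2)).

    Half-quadratic optimization: each round fixes per-entry weights computed from
    the current residual e = y - D @ a, then solves a weighted least-squares problem.
    """
    w = np.ones(len(y))
    for _ in range(iters):
        sw = np.sqrt(w)
        a = np.linalg.lstsq(D * sw[:, None], y * sw, rcond=None)[0]
        e = y - D @ a
        w = np.exp(-e ** 2 / (2 * sigma ** 2))   # large residuals get weights near zero
    return a, w
```

After a few rounds the corrupted entries receive weights near zero, so the recovered coefficients match the clean ones, whereas ordinary least squares is pulled away by the outliers.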

VIS-NIR Face Recognition: Visual versus near infrared (VIS-NIR) face image matching uses an NIR face image as the probe and conventional VIS face images for enrollment. Existing VIS-NIR techniques assume that, during classifier learning, the VIS images of each target person have NIR counterparts. However, corresponding VIS-NIR image pairs of the same person are not always available. To address this problem, we propose a transductive method named transductive heterogeneous face matching (THFM), which adapts the VIS-NIR matching learned from the available image pairs to all people in the target set. The transductive approach can simultaneously reduce the domain difference due to heterogeneous data and learn a discriminative model for the target people. In addition, we propose a simple feature representation for effective VIS-NIR matching, computed in three steps (Log-DoG filtering, local encoding, and uniform feature normalization), to reduce the heterogeneity between VIS and NIR images.
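The three-step representation can be sketched as follows. The filter widths, the choice of an 8-neighbour local binary pattern as the local encoder, the 4 x 4 grid, and the reading of "uniform feature normalization" as unit-length normalization of every cell histogram are all assumptions for illustration; the paper's exact operators may differ, and the function names are hypothetical.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge padding; output has the input's shape."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    k /= k.sum()
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def log_dog(img, s1=1.0, s2=2.0):
    """Step 1: log transform, then difference of Gaussians (band-pass filter)."""
    L = np.log1p(img.astype(float))
    return gaussian_blur(L, s1) - gaussian_blur(L, s2)

def lbp_codes(img):
    """Step 2 (assumed encoder): 8-neighbour local binary pattern on interior pixels."""
    H, W = img.shape
    center = img[1:-1, 1:-1]
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = img[1 + dy:H - 1 + dy, 1 + dx:W - 1 + dx]
        codes |= (neighbour >= center).astype(np.int64) << bit
    return codes

def face_feature(img, grid=4):
    """Step 3: per-cell code histograms, each scaled to unit L2 norm, then concatenated."""
    codes = lbp_codes(log_dog(img))
    feats = []
    for band in np.array_split(codes, grid, axis=0):
        for cell in np.array_split(band, grid, axis=1):
            h = np.bincount(cell.ravel(), minlength=256).astype(float)
            feats.append(h / (np.linalg.norm(h) + 1e-12))
    return np.concatenate(feats)
```

The log transform turns multiplicative illumination into an additive offset, and the DoG band-pass removes constant offsets and suppresses slowly varying components, which is the part of the pipeline aimed at cross-spectral differences.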

Face Normalization: KPCA is a promising technique for nonlinear processing of images. A main problem in this approach is how to learn the pre-image of a kernel feature point in the input image space, which is generally ill-posed. We present a regularized method and introduce weakly supervised learning to alleviate this ill-posed estimation problem.
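For the Gaussian kernel `k(z, x) = exp(-||z - x||^2 / (2 sigma^2))`, the pre-image of a feature-space point `sum_i gamma_i phi(x_i)` is classically approximated with a fixed-point iteration that replaces `z` by a kernel-weighted average of the training samples. The sketch below adds a simple ridge term `lam * ||z - z0||^2` toward a prior point `z0` to show how regularization stabilizes the ill-posed estimate. This regularizer and every name in the code are assumptions; the regularization and weakly supervised learning used in the paper are not reproduced.

```python
import numpy as np

def gaussian_kernel(z, X, sigma):
    """k(z, x_i) for every row x_i of X."""
    return np.exp(-np.sum((X - z) ** 2, axis=1) / (2 * sigma ** 2))

def preimage(X, gamma, sigma, z_init, lam=0.0, z0=None, iters=100):
    """Approximate pre-image z of the feature-space point sum_i gamma_i phi(x_i).

    Fixed-point update from setting the gradient of
    ||phi(z) - sum_i gamma_i phi(x_i)||^2 + lam * ||z - z0||^2 to zero.
    With lam = 0 this is the classic Gaussian-kernel iteration; if z0 is
    None, the starting point z_init is used as the prior.
    """
    gamma = np.asarray(gamma, dtype=float)
    z = np.asarray(z_init, dtype=float)
    z0 = z if z0 is None else np.asarray(z0, dtype=float)
    for _ in range(iters):
        wk = gamma * gaussian_kernel(z, X, sigma) / sigma ** 2
        denom = wk.sum() + lam
        if abs(denom) < 1e-12:
            break                      # update undefined here; keep current estimate
        z = (wk @ X + lam * z0) / denom
    return z
```

As `lam` grows, the estimate is pulled toward `z0`; with `lam = 0` and a single-point expansion, the iteration returns that training point, as expected of a pre-image.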

In solving the illumination problem for face recognition, most (if not all) existing methods either use only extracted small-scale features and discard large-scale features, or perform normalization on the whole image. In the latter case, small-scale features may be distorted when the large-scale features are modified. In this work, we argue that the large-scale features of a face image are important and contain useful information for face recognition as well as for the visual quality of the normalized image. We suggest that illumination normalization should be performed mainly on the large-scale features of a face image rather than on the whole image, and we develop a new framework accordingly.
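A minimal version of this idea splits a log-domain face image into a large-scale layer (a heavy low-pass) and a small-scale layer (the residual), normalizes only the large-scale layer, and adds the untouched small-scale detail back. The box-filter smoothing, the window radius, and the mean/contrast normalization of the large-scale layer are assumptions chosen for illustration, not the framework from the paper; the function names are hypothetical.

```python
import numpy as np

def box_blur(img, r):
    """Mean filter over a (2r+1) x (2r+1) window with edge padding, via an integral image."""
    p = np.pad(img, r, mode="edge")
    s = np.pad(p.cumsum(0).cumsum(1), ((1, 0), (1, 0)))   # s[i, j] = sum of p[:i, :j]
    k = 2 * r + 1
    H, W = img.shape
    total = s[k:k + H, k:k + W] - s[:H, k:k + W] - s[k:k + H, :W] + s[:H, :W]
    return total / k ** 2

def normalize_illumination(img, r=8, target_mean=0.0, target_std=0.5):
    """Normalize only the large-scale layer of a log-domain image; keep small-scale detail."""
    L = np.log1p(img.astype(float))
    large = box_blur(L, r)          # large-scale layer: illumination and coarse structure
    small = L - large               # small-scale layer: edges and texture, left unchanged
    large = (large - large.mean()) / (large.std() + 1e-8) * target_std + target_mean
    return large + small
```

Since the small-scale layer is added back exactly, fine facial detail is never rescaled by the normalization step, which is the distortion the paragraph above attributes to whole-image normalization.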

Nowadays, there is ever more data to process. Our group is currently working on 1) online classifiers, 2) fast search, and 3) large-scale clustering. For online classification, we developed a locality-sensitive online learning method that jointly learns local hyperplanes on streaming data.

For fast search, we focus on developing hashing models, which search for similar items in Hamming space. Our research ranges from single-modal hashing to cross-modal hashing.
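Search in Hamming space can be illustrated with random-hyperplane hashing, the simplest data-independent scheme: each bit is the sign of a random projection, and neighbours are ranked by the number of differing bits. The learned single-modal and cross-modal hash functions are not shown; the random projections, the code length, and the function names are assumptions.

```python
import numpy as np

def make_hasher(dim, n_bits, seed=0):
    """Return a function that maps vectors (one per row) to n_bits-long 0/1 codes."""
    planes = np.random.default_rng(seed).normal(size=(dim, n_bits))
    return lambda X: (np.atleast_2d(X) @ planes > 0).astype(np.uint8)

def hamming_search(query_code, db_codes, k):
    """Return the indices of the k nearest codes and all Hamming distances."""
    dist = (db_codes != query_code).sum(axis=1)   # number of differing bits per item
    return np.argsort(dist, kind="stable")[:k], dist
```

Comparing binary codes bit by bit is much cheaper than computing real-valued distances, and the codes are compact to store. Learned hashing methods replace the random planes with projections fitted to the data or, in the cross-modal case, to pairs of modalities.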

We are also interested in large-scale clustering, for which we have developed Euler clustering and fast competitive learning.
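Competitive learning, the basis of the fast and approximate kernel variants mentioned here, can be sketched as online winner-take-all updates: each incoming sample moves only its nearest prototype a small step toward itself. This is the plain linear version; the farthest-point initialization and the linearly decaying learning rate are assumptions, the function names are hypothetical, and neither Euler clustering nor the kernel approximation is reproduced.

```python
import numpy as np

def competitive_learning(X, k, epochs=10, lr0=0.5, seed=0):
    """Online winner-take-all clustering: each sample moves only its nearest prototype."""
    rng = np.random.default_rng(seed)
    protos = [X[rng.integers(len(X))]]
    for _ in range(k - 1):                        # farthest-point initialization (assumed)
        d = np.min([((X - p) ** 2).sum(axis=1) for p in protos], axis=0)
        protos.append(X[np.argmax(d)])
    W = np.array(protos, dtype=float)             # copy, so updates never touch X
    t, total = 0, epochs * len(X)
    for _ in range(epochs):
        for i in rng.permutation(len(X)):
            lr = lr0 * (1.0 - t / total)          # linearly decaying learning rate
            j = np.argmin(((W - X[i]) ** 2).sum(axis=1))   # the winning prototype
            W[j] += lr * (X[i] - W[j])            # update the winner only
            t += 1
    return W

def assign(X, W):
    """Index of the nearest prototype for every sample."""
    return np.argmin(((X[:, None, :] - W[None, :, :]) ** 2).sum(axis=2), axis=1)
```

Each update touches a single prototype, so the cost per sample is one nearest-prototype search; this is what makes competitive learning attractive for streaming and large-scale data, and also why a poor initialization can leave some prototypes unused.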

Jiansheng Wu (student), Wei-Shi Zheng*, Jian-Huang Lai. Approximate Kernel Competitive Learning. Neural Networks, pp. 117-132, 2015 [CODE]
